Blog Posts

How vulnerable is our critical national infrastructure?

02 04, 15

As originally published on Help Net Security:

Critical infrastructure is considered the backbone of the nation’s economy, security and health. It provides the power, water, transportation, and communications systems we rely on to connect us with our friends, our families, and our communities.

Utility, oil and gas, manufacturing and alternative energy organizations are fending off cyber attacks on a daily basis. From activist groups to state-sponsored hackers, attackers regularly target our nation’s critical infrastructure in an attempt to disrupt services and cause havoc.

Information technology has evolved significantly in just the past decade, yet most critical infrastructure technology is based on embedded hardware and proprietary protocols that predate the Internet. Years ago, systems were largely isolated, with operations managers working onsite rather than connecting in from remote offices or from the road. There was no need to connect to a corporate network or the Internet, and the security models of many of these systems reflect these simpler times.

In an attempt to streamline business, improve communication in the supply chain and stay current with technology trends such as Big Data and the Internet of Things, these organizations have been connecting their critical control systems to open and often public networks.

Unfortunately, these networks may not be as secure as believed, exposing companies to an abundance of cyber attacks and vulnerabilities. The once-obscure proprietary protocols used by industrial control systems have been dissected and analyzed, with the results spread across the Internet for any interested party to peruse. Researchers (both those looking to help make the Internet more secure and those looking to defeat its security) are actively looking for vulnerabilities. Dedicated search engines like Shodan allow potential attackers to quickly find systems that are vulnerable to their latest exploit (though Google often works in a pinch as well). Despite the well-publicized attacks of recent years (and the ones never made public), security still isn’t seen as a priority by many of the organizations that form our critical infrastructure.

Cybercrime is forcing companies of all sizes in all sectors to take notice; the threat of a cyber attack has serious repercussions that reach far beyond the companies’ business to the individuals who rely on the services of these organizations for their day-to-day needs. A pair of research papers by Trend Micro shows how common attacks on critical infrastructure systems have become, who is behind them, and the types of damage these attackers are willing to cause, even with no apparent motive.

In the extreme case, Stuxnet and its descendants have shown us the damage a motivated state attacker can cause. Thirty years ago, physical threats were the biggest concern for critical infrastructure; today, a cyber attack that isn’t easily attributable to a specific actor poses the greatest threat. It is essential that critical infrastructure keeps functioning reliably.

How can critical infrastructure organizations manage to stay up to date with technology while protecting their company from a security breach?

Cyber security standards and guidelines already exist and, in many cases, have been in place for years, yet reported attacks continue to grow, and many could have been avoided. Despite growing global awareness of the threat of cyber attacks against critical infrastructure systems, guidelines and frameworks are exactly that: guidelines and suggestions to follow, rather than legal requirements to comply with. In many cases these guidelines provide only a bare minimum, failing to address the additional risks posed by a specific organization’s architectural design choices.

It remains the responsibility of the industry to continuously monitor and control its own systems and IT environments. Additionally, because critical infrastructure systems have become so connected to the broader corporate network, all employees, not just IT employees, need to be educated and trained to do everything possible to reduce the risk of a cyber attack.

Sitting tight and hoping for the best is not an option. The risk of a cyber attack isn’t going away and critical systems are not becoming less vulnerable to attack. To control the risk, an organization must understand its current risk exposure across all areas of the business and focus on the critical areas.

To limit the damage of a security breach, including reputation damage and financial loss, a detailed incident response plan is essential. Timely incident response is imperative after a breach, but having a skilled in-house security expert on call 24x7 may not be an option for many companies, as there is a growing global skills shortage in this industry that will likely take years to improve. Many organizations outsource these critical functions, gaining reassurance that their systems are monitored around the clock, with security experts on hand to provide crucial support when needed.

It’s clear that critical infrastructures are under scrutiny from both attackers and defenders. Organizations need to understand their cyber security efforts and where improvements can be made, allowing them to identify and fix the weaknesses in their infrastructure. The industry needs to take control of the issue and find ways to reduce the growing number of threats by building systems that bake in security as part of the design and thereby reduce the number of exploitable vulnerabilities. Until that day arrives, organizations need to remain attentive to protect their assets.

In order to reduce high-risk situations, these ten steps will help improve security controls:

1. Understand your risk - an annual risk assessment exercise should be conducted with an expert who has conducted similar technical risk assessments in order to identify the risks that baseline security and compliance standards don’t cover and determine what level of security is appropriate for a particular system.

2. Secure configuration - keep software and hardware up to date; persistence always pays off. Work with suppliers to ensure proprietary systems are maintained, and build an asset register with a focus on end-of-life/unsupported systems that will require extra protection.

3. Aim for real-time detection - continuously monitor all log data generated by the organization’s IT system to keep track of what strays from “normal” activity and be prepared to respond immediately to any perceived issue. This will likely include a combination of IPS, DLP, FIM, and SIEM solutions working together to provide deep visibility.

4. Educate and train your employees - make sure they really understand your policies, procedures, and incident response processes. Make it a priority to teach everyone at least the basics.

5. Check passwords on connected devices - make sure the devices aren’t using weak passwords that are easily hacked. Default passwords for even obscure products are well known and documented on the Internet, and attackers will try these first. Commonly used or otherwise simple passwords aren’t much better.

6. Incident response - establish, produce and routinely test incident management plans to ensure that there is an effective response to maintain business continuity in the face of a breach.

7. Secure network - manage the external and internal network perimeters to filter out unauthorized access. It is key to understand what is on your network and what protocols traverse it. This can’t be accomplished if critical systems share a “flat” network with other unrelated systems with unrestricted internal access. When feasible, completely disconnect critical networks from the Internet and other networks to eliminate the possibility of remote attacks.

8. Malware protection - establish anti-malware defenses and continuously scan for malware. While this won’t stop every attack and shouldn’t be relied on, it can provide early warning of a sloppy attacker.

9. Test security - regular penetration tests should be conducted in order to identify weaknesses and test the effectiveness of other security controls. These should go beyond basic vulnerability scans and include hands-on attempts to exploit vulnerabilities conducted by testers who are familiar with the techniques necessary to attack industrial control systems.

10. Pay attention to new threats - new vulnerabilities arise regularly, whether it is a simple exploit discovered in a particular product or an entirely new way of manipulating a common protocol that affects a wide range of products dating back years (as we have seen with a number of SSL vulnerabilities lately). All of the policies, procedures, risk assessments, and security controls should constantly be updated to address these latest threats as they are discovered rather than waiting until they are exploited, when it is often too late.
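As a rough illustration of steps 2 and 5, an asset register can be as simple as a list of records that is checked on a schedule. The sketch below is only one way to do it: the `Asset` fields, the device names, and the dates are all invented for the example, and a real register would be fed by inventory and audit tooling rather than typed in by hand.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Asset:
    name: str
    end_of_support: date            # vendor end-of-life date (hypothetical field)
    default_password_changed: bool  # outcome of a credential audit (hypothetical field)


def flag_assets(assets, today):
    """Return {asset name: [reasons]} for assets that need extra protection."""
    findings = {}
    for asset in assets:
        reasons = []
        if asset.end_of_support <= today:
            reasons.append("unsupported")
        if not asset.default_password_changed:
            reasons.append("default password")
        if reasons:
            findings[asset.name] = reasons
    return findings


# Example register; every name and date here is made up for illustration.
register = [
    Asset("pump-controller-1", date(2012, 6, 30), True),
    Asset("hmi-workstation-3", date(2020, 1, 14), False),
    Asset("historian-db", date(2019, 12, 31), True),
]

findings = flag_assets(register, today=date(2015, 1, 1))
for name, reasons in findings.items():
    print(f"{name}: {', '.join(reasons)}")
```

Anything the check flags is a candidate for the extra protection described in step 2 (or a password change per step 5); anything it doesn't flag still needs the rest of the list.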

As critical infrastructure companies become more connected to the Internet, they are placed under high scrutiny from cyber attackers. It is vital for organizations to recognize where they stand in their cyber security efforts and pinpoint where there are weaknesses in their infrastructure. It is extremely important for companies to be prepared for cyber threats and attacks, and aware of the repercussions, not only for them but also for those who rely on them on a daily basis.

Hacking the Hackers: The Legal Risks of Taking Matters Into Private Hands

23 09, 14

Following up from an incident that Microsoft caused a while back trying to close down some malware, Becca Lipman at Wall Street & Technology has posted a great article on the difficult issues faced by financial institutions trying to protect themselves from relentless hackers that are hiding in countries where they can’t be served with justice.

Three critical changes to PCI DSS 3.0 that every merchant should know

23 09, 14

The strategic concerns of security in cloud computing

Chip & Pain, EMV Will Not Solve Payment Card Fraud

18 09, 14

As published in Wall Street and Technology:

Switching to EMV cards will lower retail fraud, but it's not enough. Here's the good, the bad, and the ugly.

Home Depot, much like Target before it, has responded to its breach with a press release indicating that it will be rolling out Chip and PIN technology. While this is a positive step, it is also a bit of a red herring: Chip and PIN technology alone would have done little to nothing to prevent these breaches.

Chip and PIN is one piece of a larger standard called EMV. This standard defines how chip cards interoperate with point-of-sale terminals and ATMs. It includes the Chip and PIN functionality that we hear so much about as well as Chip and Sign functionality that seems more likely to get rolled out in the US. EMV is not without its flaws.

It's all about the money

The card brands are pushing for EMV to be in place by October 2015 with gas pumps and ATMs allowed an extension until October 2017. The mechanism by which this is being accomplished is a liability shift.

In the US today the bank or card brand is typically responsible for most fraud losses. When the deadlines pass the acquirers will be transferring liability for fraud losses down to whoever isn't using EMV technology. For example, if fraud is committed with an EMV card at a merchant that only supports stripe cards then the merchant will be liable.
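The liability shift boils down to a small decision rule. The function below is a simplified sketch of the rule as described here, not the card brands' actual rulebook; the function name and its two parameters are made up for illustration.

```python
def liable_party(card_has_chip: bool, merchant_supports_emv: bool) -> str:
    """Who bears a fraud loss after the deadline (simplified sketch)."""
    # Liability moves to whoever isn't using EMV: a chip card used at a
    # stripe-only merchant puts the loss on the merchant.
    if card_has_chip and not merchant_supports_emv:
        return "merchant"
    # Otherwise the loss stays where it typically sits today.
    return "bank or card brand"


print(liable_party(card_has_chip=True, merchant_supports_emv=False))
```

Real-world liability rules carry more conditions (transaction type, card brand, PIN versus signature), so treat this as the headline rule only.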

The good

The advantage of an EMV card is that the chip is much harder to clone than a magnetic stripe.

The magnetic stripes are like miniature tape cassettes that can easily be overwritten with stolen data while chips are more like miniature computers that cryptographically validate themselves. The chips are not supposed to give up the secret keys that would be necessary in order to create a clone.
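To make the cassette-versus-computer difference concrete, here is a minimal sketch. It is not EMV's actual cryptography: it uses a generic HMAC challenge-response, and the class and function names are invented for illustration. The point is only that a stripe hands over everything needed to copy it, while a chip answers a fresh challenge without ever revealing its key.

```python
import hashlib
import hmac
import secrets


class StripeCard:
    """Static data: whatever a reader sees can be written to a blank card."""

    def __init__(self, track_data: bytes):
        self.track_data = track_data

    def read(self) -> bytes:
        return self.track_data


class ChipCard:
    """Holds a secret key and only ever reveals responses computed from it."""

    def __init__(self, secret_key: bytes):
        self._secret_key = secret_key

    def respond(self, challenge: bytes) -> bytes:
        return hmac.new(self._secret_key, challenge, hashlib.sha256).digest()


def verify(card_key: bytes, challenge: bytes, response: bytes) -> bool:
    """Issuer-side check: recompute the expected response and compare."""
    expected = hmac.new(card_key, challenge, hashlib.sha256).digest()
    return hmac.compare_digest(expected, response)


# Cloning a stripe: the skimmed data is the card.
original = StripeCard(b"illustrative-track-data")
clone = StripeCard(original.read())
stripe_cloned = clone.read() == original.read()

# A chip's answer is only valid for the challenge it was computed for.
key = secrets.token_bytes(32)
chip = ChipCard(key)
first_challenge = secrets.token_bytes(16)
captured = chip.respond(first_challenge)
genuine_ok = verify(key, first_challenge, captured)

# An attacker replaying the captured response against a new challenge fails.
replay_ok = verify(key, secrets.token_bytes(16), captured)
```

Here `stripe_cloned` and `genuine_ok` come out true while `replay_ok` comes out false: eavesdropping on one chip transaction does not give an attacker what is needed to pass the next one.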

Chip and PIN cards also make it more difficult to steal and use a physical card. The thief would need to know the PIN to use the stolen card.

The bad

So far banks in the US are rolling out Chip and Sign cards due to fears about consumer acceptance of PINs. With Chip and Sign it remains possible for a thief to steal a physical card and make a purchase at any store by drawing a squiggle on a piece of paper.

There are deeper problems with the transition, though. Not every merchant or bank will support EMV right away, so both EMV cards and terminals will continue to support magnetic stripes. Stripe data stolen from a non-EMV merchant can still be used for fraud, and unless terminals enforce the use of cards in EMV mode, stolen card data can be used in magnetic stripe mode regardless of its source.

The ugly

The chip helps verify that the card is legitimate but most EMV terminals read the unencrypted card details off of the chip in nearly the same way that a magnetic stripe terminal reads them now. A compromised point-of-sale terminal could still skim off card details that could be used for fraud elsewhere.

Security researchers have also identified a few different techniques for capturing PINs and an attack that allows an incorrect PIN to be used successfully. EMV terminals are also not immune from people tampering with the terminals themselves, including in the supply chain, and this has already resulted in some real-world breaches.

E-commerce still relies on punching a card number into a website. EMV offers no protection here: cards could be stolen from compromised e-commerce servers, and stolen card data could be used to make online purchases.

What, if not EMV?

EMV does lower retail fraud where it is used today, largely because it's easier to steal cards and commit fraud in another geography where EMV is not in use. As other sources of card data dry up, we can expect that the flaws in EMV that we already know about will be exploited more widely and that new exploits will be found. Before too long we will end up right back where we are today.

The real solution to the retail breaches we've been seeing is encryption. By the time the card data gets to the point-of-sale terminal it's too late. Encryption should happen as close to the card as possible; this means in the terminal hardware as the card is read. In this model the only realistic attack a merchant would have to be concerned with is tampering with the terminal hardware itself.

PCI has published the Point-to-Point Encryption (P2PE) standard to standardize this approach, but most merchants are focusing on the migration to EMV instead. I'm afraid that soon after the shift to EMV is complete we will find ourselves making another forced migration to P2PE. Either that or consumers and merchants will begin their own migration to alternative payment technologies.

Driving Information Security, From Silicon Valley to Detroit

20 08, 14

As published in Wall Street and Technology:

For better or worse, computer software vendors are practically devoid of any liability for vulnerabilities in the software they sell (although there is certainly a heated discussion on this topic). As far as vendors are concerned, software is “licensed” rather than sold, and users who accept those licenses are agreeing to waive certain rights, including the right to collect damages resulting from failures in the software.

To pull one particular example from the license for Microsoft SQL Server Enterprise 2012, a widely used piece of database software that underpins a significant number of enterprise applications that handle millions of dollars worth of transactions each:

When a flaw is discovered, including security flaws that are actively being exploited to breach systems, a vendor will typically issue a patch (sometimes many months later and, hopefully, without causing more problems than it fixes), and that is the end of the issue: no lawsuits, no refunds, and no damages.

This liability-free model used by software vendors stands in stark contrast to almost any other product that is bought and sold. Product liability laws hold manufacturers and sellers responsible for design or manufacturing defects in their products. Rather than releasing a fix and calling it a day, these companies will find themselves on the hook financially for the consequences of their failures.

Most consumer products also don’t receive the frequent software updates that we are used to applying to our computers; whatever software version comes in a consumer product tends to stay in it for life. In the automotive world this has already led to some comically outdated in-dash navigation, information, and entertainment systems (especially when compared to today's rapidly evolving smartphones and tablets) but will also likely lead to some horribly vulnerable unpatched software.

These two worlds, both operating under very different rules, are colliding. Cutting-edge computers and software are increasingly finding their way into the types of products we buy every day, and nowhere is this more apparent than in the automotive world. The days of carbureted vehicles that could be tuned with a timing light and a screwdriver ended in the 1990s, replaced with fuel injection and electronic ignition systems that are controlled by computers actively adjusting engine parameters as we drive, based on the readings from a network of sensors scattered throughout the vehicle. These networks have grown to include more than just the engine sensors.

In-car networking standards, such as the CAN bus standard, enable a wide array of devices within a vehicle to communicate with each other, allowing huge wiring harnesses containing hundreds of bundled wires, fuses, and switches to be replaced with commands and updates traveling over a single wire. On modern cars the brakes may not be controlled by a hydraulic line connected to the brake pedal; the throttle may not be controlled by a cable connected to the gas pedal; and the steering may not be controlled by a shaft connected to the steering wheel. Instead, the brake pedal, gas pedal, and steering wheel could all just be electronic sensors that send computerized commands over the CAN bus network to electric motors elsewhere in the vehicle that carry out those commands. Toyota’s electronic throttle control system has already made headlines as part of a series of unintended acceleration cases that involved 16 deaths, 243 injuries, a motorist released from jail, and a $1.2 billion fine.

This issue goes much deeper than the types of software mistakes that can cause a car to malfunction on its own. As we’ve seen with much of the software connected to the Internet, including some other systems that can have real-world (and sometimes very messy) consequences, it is the malicious hackers that can cause the most problems. Security researchers have already been looking into these sorts of possibilities and have separately demonstrated the ability to gain access to in-car networks from a remote location and affect a vehicle’s braking, steering, and acceleration (among other things) once they gain access to the in-car network.

Other attacks like location tracking and eavesdropping on a vehicle’s occupants via hands-free communication microphones are also possible, but they pale in comparison to the potentially fatal consequences of interference with the vehicle controls. Presentations at the annual Black Hat Conference and DEF CON security conferences this month have also covered topics related to automotive network and computer security, while a group in China is offering a prize of $10,000 to anyone who can gain remote access to a Tesla’s on-board operating system.

Although some of the media reports on this topic are being dismissed within the information security community as “stunt hacking” (sensationalist stories based on hacks conducted in unrealistic conditions) and manufacturers are quick to state that their car systems have safety checks built in, it is clear that the building blocks for a real-world attack are being built and assembled. The firmware manipulation techniques demonstrated at DEF CON earlier this month could be used to override or eliminate the safety checks built in by the manufacturers, and it is only a matter of time before the techniques that are being used to remotely access cars are combined with the techniques to manipulate the controls.

All of these attack vectors would require the attacker to be in or near the target vehicle, but services like GM’s OnStar, BMW’s Assist, and others utilize mobile cellular connections to connect vehicles to the outside world. New smartphone apps that allow vehicle owners to interface with their cars remotely can open up these interfaces essentially to anyone on the Internet. It’s not too far-fetched to imagine that a few years from now bored Chinese hackers could spend their downtime crashing cars instead of trying to cause trouble at water treatment plants.

Motor vehicles have been built with mechanical and hydraulic linkages for over a century, and the basic safety principles for those types of systems are well understood. Designing reliable software for complex vehicles is a fairly new discipline that is only understood by a few companies (and even they make mistakes). Malfunctions or outside interference with operating vehicles can easily have fatal consequences, and the increasing use of networked control systems connected to the outside world increases the likelihood of accidental or malicious incidents.

The developers of the electronic systems in our vehicles would do well to heed the the saying “with great power comes great responsibility.” As we’ve seen with both Toyota and GM’s recent troubles, safety issues can bring heavy financial consequences for manufacturers. Congress is starting to pay attention to the issue of car hacking as well, and it will likely only take one high-profile incident to provoke regulatory action.

Tesla Motors has already shaken up the industry by bringing its Silicon Valley approach to the automobile business and continues with this approach by actively soliciting information from the public on security vulnerabilities in its vehicles and publicly posting a “Hall of Fame” for security researchers who have assisted them. Perhaps this is part of the future, manufacturers working closer with their customers to find and address issues.

As Google experiments with some of the first realistic self-driving cars, it isn’t too far fetched to imagine them following the same path as Tesla when it comes to working with security researchers, especially in light of Google’s existing bug bounty programs. In any case, one habit of Silicon Valley that we can be almost assured won’t carry over to the automotive world is the practice of disclaiming liability for damages from the improper operation of software; the Toyota case has shown us that those days are already over. Who knows? Before long, it may be Silicon Valley looking to Detroit for advice on how to handle product liability concerns.

As a footnote, many of the issues raised here are applicable to other industries outside the automotive sector as well (software vulnerabilities in medical devices and industrial control systems have been getting quite a bit of attention as of late). But it’s hard to imagine any other industry that is as integral to the national (and global) economy, whose products are used more frequently by such a large proportion of the population, and the correct operation of which carries life-and-death consequences.

For better or worse, computer software vendors are practically devoid of any liability for vulnerabilities in the software they sell (although there is certainly a heated discussion on this topic). As far as vendors are concerned, software is “licensed” rather than sold, and users who accept those licenses are agreeing to waive certain rights, including the right to collect damages resulting from failures in the software.

To pull one particular example from the license for Microsoft SQL Server Enterprise 2012, a widely used piece of database software that underpins a significant number of enterprise applications that handle millions of dollars’ worth of transactions each:

YOU CAN RECOVER FROM MICROSOFT AND ITS SUPPLIERS ONLY DIRECT DAMAGES UP TO THE AMOUNT YOU PAID FOR THE SOFTWARE... YOU CANNOT RECOVER ANY OTHER DAMAGES, INCLUDING CONSEQUENTIAL, LOST PROFITS, SPECIAL, INDIRECT OR INCIDENTAL DAMAGES.

When a flaw is discovered, including security flaws that are actively being exploited to breach systems, a vendor will typically issue a patch (sometimes many months later and, hopefully, without causing more problems than it fixes), and that is the end of the issue: no lawsuits, no refunds, and no damages.

This liability-free model used by software vendors stands in stark contrast to almost any other product that is bought and sold. Product liability laws hold manufacturers and sellers responsible for design or manufacturing defects in their products. Rather than releasing a fix and calling it a day, these companies will find themselves on the hook financially for the consequences of their failures.

Software infiltrates everything

Government oversight bodies like the Consumer Product Safety Commission, the National Highway Traffic Safety Administration, and the Food and Drug Administration track complaints and have the ability to force recalls or issue fines. For a recent example of these consequences we can look to General Motors’ ignition recall troubles that have so far resulted in $2.5 billion worth of recalls, fines, and compensation funds.

Most consumer products also don’t receive the frequent software updates that we are used to applying to our computers; whatever software version comes in a consumer product tends to stay in it for life. In the automotive world this has already led to some comically outdated in-dash navigation, information, and entertainment systems (especially when compared to today's rapidly evolving smartphones and tablets) but will also likely lead to some horribly vulnerable unpatched software.

These two worlds, both operating under very different rules, are colliding. Cutting-edge computers and software are increasingly finding their way into the types of products we buy every day, and nowhere is this more apparent than in the automotive world. The days of carbureted vehicles that could be tuned with a timing light and a screwdriver ended in the 1990s, replaced with fuel injection and electronic ignition systems that are controlled by computers actively adjusting engine parameters as we drive, based on the readings from a network of sensors scattered throughout the vehicle. These networks have grown to include more than just the engine sensors.

In-car networking standards, such as the CAN bus standard, enable a wide array of devices within a vehicle to communicate with each other, allowing huge wiring harnesses containing hundreds of bundled wires, fuses, and switches to be replaced with commands and updates traveling over a single wire. On modern cars the brakes may not be controlled by a hydraulic line connected to the brake pedal; the throttle may not be controlled by a cable connected to the gas pedal; and the steering may not be controlled by a shaft connected to the steering wheel. Instead, the brake pedal, gas pedal, and steering wheel could all just be electronic sensors that send computerized commands over the CAN bus network to electric motors elsewhere in the vehicle that carry out those commands. Toyota’s electronic throttle control system has already made some headlines as part of a series of unintended acceleration cases involving 16 deaths, 243 injuries, a motorist released from jail, and a $1.2 billion fine.
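To make the shared-network idea concrete, here is a minimal Python sketch of a simplified CAN-style frame travelling over a toy broadcast bus. The identifier `THROTTLE_ID`, the one-byte payload layout, and the `Bus` and `ThrottleActuator` classes are all hypothetical and chosen only for illustration; real vehicles use manufacturer-specific identifiers and encodings. What the sketch does reflect is that a classic CAN frame carries an identifier and up to eight data bytes but no sender field, so a receiving node cannot tell which device put a frame on the bus.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Frame:
    arbitration_id: int  # 11-bit identifier in classic CAN
    data: bytes          # at most 8 bytes in classic CAN

    def __post_init__(self):
        if not 0 <= self.arbitration_id <= 0x7FF:
            raise ValueError("standard CAN identifiers are 11 bits")
        if len(self.data) > 8:
            raise ValueError("classic CAN payloads are at most 8 bytes")


class Bus:
    """Toy broadcast bus: every attached node receives every frame."""

    def __init__(self):
        self._listeners: List[Callable[[Frame], None]] = []

    def attach(self, listener: Callable[[Frame], None]) -> None:
        self._listeners.append(listener)

    def send(self, frame: Frame) -> None:
        # Note there is no "sender" argument: the frame itself is anonymous.
        for listener in self._listeners:
            listener(frame)


THROTTLE_ID = 0x0C0  # hypothetical identifier, not taken from any real vehicle


class ThrottleActuator:
    """Hypothetical node that sets throttle position from the first data byte."""

    def __init__(self):
        self.position_percent = 0

    def on_frame(self, frame: Frame) -> None:
        if frame.arbitration_id == THROTTLE_ID and frame.data:
            self.position_percent = frame.data[0]


bus = Bus()
actuator = ThrottleActuator()
bus.attach(actuator.on_frame)

# The pedal sensor asks for 20% throttle.
bus.send(Frame(THROTTLE_ID, bytes([20])))

# Any other node on the bus can send the same identifier, and the
# actuator has no way to distinguish it from the pedal sensor.
bus.send(Frame(THROTTLE_ID, bytes([100])))
```

In this toy model the second frame simply wins, which is why access to the in-car network matters so much in the attacks discussed in this article.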

This issue goes much deeper than the types of software mistakes that can cause a car to malfunction on its own. As we’ve seen with much of the software connected to the Internet, including some other systems that can have real-world (and sometimes very messy) consequences, it is the malicious hackers that can cause the most problems. Security researchers have already been looking into these sorts of possibilities and have separately demonstrated the ability to gain access to in-car networks from a remote location and affect a vehicle’s braking, steering, and acceleration (among other things) once they gain access to the in-car network.

Other attacks like location tracking and eavesdropping on a vehicle’s occupants via hands-free communication microphones are also possible, but they pale in comparison to the potentially fatal consequences of interference with the vehicle controls. Presentations at the annual Black Hat and DEF CON security conferences this month have also covered topics related to automotive network and computer security, while a group in China is offering a prize of $10,000 to anyone who can gain remote access to a Tesla’s on-board operating system.

Although some of the media reports on this topic are being dismissed within the information security community as “stunt hacking” (sensationalist stories based on hacks conducted in unrealistic conditions) and manufacturers are quick to state that their car systems have safety checks built in, it is clear that the building blocks for a real-world attack are being built and assembled. The firmware manipulation techniques demonstrated at DEF CON earlier this month could be used to override or eliminate the safety checks built in by the manufacturers, and it is only a matter of time before the techniques that are being used to remotely access cars are combined with the techniques to manipulate the controls.

Many ways to attack

For an attacker, getting access to a car’s network is not as hard as it may initially seem. The most obvious attack point would be the On-Board Diagnostics connector that is usually located in a discreet spot under a vehicle’s steering wheel where a small and cheap microcontroller could be connected. More interesting attacks could be launched via malware contained on CDs, DVDs, or USB devices loaded into the vehicle’s infotainment system. Moving into the wireless realm, many cars come equipped with Bluetooth or WiFi connectivity for smartphones and other devices within the vehicle.

All of these attack vectors would require the attacker to be in or near the target vehicle, but services like GM’s OnStar, BMW’s Assist, and others utilize mobile cellular connections to connect vehicles to the outside world. New smartphone apps that allow vehicle owners to interface with their cars remotely can open up these interfaces essentially to anyone on the Internet. It’s not too far-fetched to imagine that a few years from now bored Chinese hackers could spend their downtime crashing cars instead of trying to cause trouble at water treatment plants.

Motor vehicles have been built with mechanical and hydraulic linkages for over a century, and the basic safety principles for those types of systems are well understood. Designing reliable software for complex vehicles is a fairly new discipline that is only understood by a few companies (and even they make mistakes). Malfunctions or outside interference with operating vehicles can easily have fatal consequences, and the increasing use of networked control systems connected to the outside world increases the likelihood of accidental or malicious incidents.

The developers of the electronic systems in our vehicles would do well to heed the saying “with great power comes great responsibility.” As we’ve seen with both Toyota’s and GM’s recent troubles, safety issues can bring heavy financial consequences for manufacturers. Congress is starting to pay attention to the issue of car hacking as well, and it will likely only take one high-profile incident to provoke regulatory action.

Tesla Motors has already shaken up the industry by bringing its Silicon Valley approach to the automobile business and continues with this approach by actively soliciting information from the public on security vulnerabilities in its vehicles and publicly posting a “Hall of Fame” for security researchers who have assisted it. Perhaps this is part of the future: manufacturers working more closely with their customers to find and address issues.

As Google experiments with some of the first realistic self-driving cars, it isn’t too far-fetched to imagine it following the same path as Tesla when it comes to working with security researchers, especially in light of Google’s existing bug bounty programs. In any case, one habit of Silicon Valley that we can be almost assured won’t carry over to the automotive world is the practice of disclaiming liability for damages from the improper operation of software; the Toyota case has shown us that those days are already over. Who knows? Before long, it may be Silicon Valley looking to Detroit for advice on how to handle product liability concerns.

As a footnote, many of the issues raised here are applicable to other industries outside the automotive sector as well (software vulnerabilities in medical devices and industrial control systems have been getting quite a bit of attention as of late). But it’s hard to imagine any other industry that is as integral to the national (and global) economy, whose products are used more frequently by such a large proportion of the population, and the correct operation of which carries life-and-death consequences.

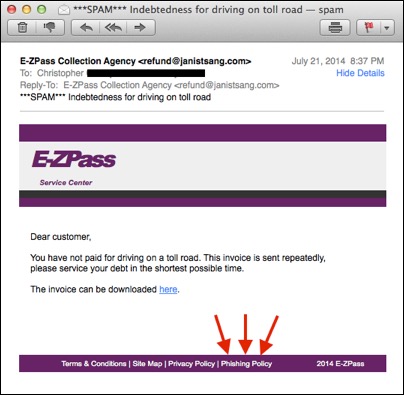

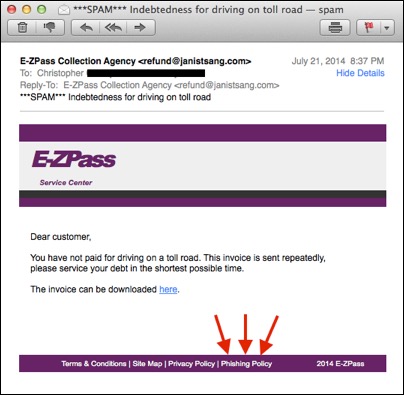

Phishing Policies

22 07, 14

Just got this in my spam box, quite possibly my favorite phishing email of all time. It looks like a pretty good knock-off of an E-ZPass email considering that it was all done without images (although they could have done a little better on the text). The best part is the link to the “Phishing Policy” at the bottom of the email. I don’t dare click on it to see where the link actually goes but it’s nice to know the bad guys have a sense of humor too.

Vigilante Justice on the Digital Frontier

14 07, 14

As published in Wall Street and Technology:

This is a story about Microsoft and a company called Vitalwerks, but first let's go through a fictional scenario.

Let's say you own a number of office buildings. Unbeknownst to you, some of your tenants are engaged in criminal activity. In particular, a crime ring operating out of some of these offices steals cars and uses them to rob banks. One day, you start getting angry calls from your tenants (the ones involved in legitimate businesses), because they are all locked out of their offices. You come to discover that General Motors, upset that its products are being stolen and used in bank robberies, has managed to identify the crime ring. However, rather than contacting you (the landlord), so that you can evict the offenders, or getting law enforcement involved to apprehend the criminals, the company spent months applying for a court order allowing it to seize the crime ring's offices on its own.

Unfortunately for you and your legitimate tenants, instead of locking down the individual offices used by the criminals, General Motors seized and locked down your entire office buildings.

This scenario seems absurd on so many levels. Why allow the criminals to operate with impunity for months instead of taking immediate action? Why not contact the landlord or law enforcement for help, instead of resorting to a secret seizure order? Why seize entire buildings, rather than the individual offices used by the suspects? Why is a third party like General Motors even involved to this degree? How could a court ever agree that any of this was a good idea and issue an order allowing it? Despite the court order, the whole thing reeks of vigilante justice.

As absurd as this all seems, it actually happened on June 30, only it was all online. The criminals were distributing malware. The landlord was a hosting company called Vitalwerks. The targets of the seizure were Vitalwerks' Internet domain names, and the company doing the seizing was Microsoft.

Vitalwerks' domains were handed over to Microsoft as a result of a court order. This transfer is done by domain registrars who actually control the Internet's domain name resolution infrastructure. It does not require any notifications to or actions on the part of the target. In theory, Microsoft's goal was to use its control of the domains to "sinkhole" the subdomains used by the malware (redirecting them to a system that doesn't distribute malware). However, because of what Microsoft is calling a small technical error, it actually interrupted service for millions of Vitalwerks' legitimate customers. It took days before service was completely restored.
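The sinkholing idea can be sketched in a few lines of Python. This is an illustration only and not a description of Microsoft's actual implementation: the hostnames, the blocklist, the `resolve` function, and all of the IP addresses are hypothetical (the addresses are taken from ranges reserved for documentation).

```python
from typing import Dict, Optional, Set

# Hypothetical sinkhole address (from a documentation-only range).
SINKHOLE_IP = "192.0.2.1"

# Hypothetical customer records hosted under the seized domain.
CUSTOMER_RECORDS: Dict[str, str] = {
    "malware-c2.example.com": "198.51.100.7",
    "home-camera.example.com": "203.0.113.25",
}

# Hypothetical list of subdomains known to be used by malware.
MALICIOUS: Set[str] = {"malware-c2.example.com"}


def resolve(hostname: str,
            records: Dict[str, str],
            blocklist: Set[str]) -> Optional[str]:
    """Answer a lookup the way a sinkholing operator intends to.

    Blocklisted names are redirected to the sinkhole; every other name
    must still receive its normal answer. Unknown names return None.
    """
    name = hostname.lower().rstrip(".")
    if name in blocklist:
        return SINKHOLE_IP
    return records.get(name)


# Malware traffic is redirected to the sinkhole...
print(resolve("malware-c2.example.com", CUSTOMER_RECORDS, MALICIOUS))
# ...while a legitimate customer's hostname resolves as before.
print(resolve("home-camera.example.com", CUSTOMER_RECORDS, MALICIOUS))
```

The sketch also shows why such a takeover is delicate: whoever controls the domain has to keep answering correctly for every legitimate hostname, not just redirect the malicious ones.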

The seizure does seem to have affected criminal operations. Kaspersky reports that 25% of the APT groups it was tracking have been affected. This raises the question of whether the end justifies the means. In this case, the means was a tricky technical maneuver that went awry and affected millions of hosts for days in an industry where providers strive to have as many nines in their uptime as possible.

This isn't the only instance of this phenomenon, either. The tactic of hijacking domains to interrupt malware traffic has been used for a few years and is quickly becoming a favorite for Microsoft's Digital Crimes Unit. Of course, given some of the tactics used by law enforcement agencies (such as taking hundreds of unrelated servers from co-location facilities in raids), the seizure of a few domains might actually be the lesser of two evils.

Unlike some of the "bulletproof hosting" providers operating out of Eastern Europe, where a forced takeover may be the only way to block malicious traffic, Vitalwerks is based in the US, where the law doesn't look too kindly on organizations that intentionally harbor hackers. In this case, Vitalwerks says it was unaware of the malware that was utilizing its service, and that it would have immediately blocked the offending accounts if it had known about them. The company says it has actually worked with Microsoft to block malicious accounts in the past, so it isn't sure why anyone would go through the time and effort to get a court order (allowing the malware to operate the whole time) when it could have acted immediately.

On the other side of the argument, the type of hosting service provided by Vitalwerks is easily abused (though these services do have legitimate purposes). Microsoft's Digital Crimes Unit contends that Vitalwerks was not doing enough on its own to prevent abuse.

It seems that we are dealing with the age-old consequences of frontier justice moved from the Wild West to the digital realm. Private organizations are taking law enforcement into their own hands, because the government hasn't been able to keep up. Innocent bystanders are being hurt in the process. Companies that rely on their Internet presence to do business may want to be careful about the providers they choose. They risk getting caught in the crossfire if criminals happen to be in the vicinity.

Bank Fraud: It’s Not Personal, Just Business

24 06, 14

As published in Wall Street and Technology:

Less publicized (but nonetheless costly) incidents of fraud, questions of liability, and mixed success in court complicate the allocation of security resources.

High-profile breaches of consumer data have been in the news lately, with Neiman Marcus, Michael's, and Target each losing hundreds of thousands to millions of payment card details. As of last week it looks as if we will be able to add P.F. Chang’s to that list as well.

Much of the media coverage of these events has revolved around the impact on consumers and what consumers should do to protect themselves, but the reality of these breaches is that the consumers are the least likely to be affected: Federal law limits liability for fraudulent credit or debit card purchases to $50 in most cases (with the condition that the loss or theft of the card is reported promptly in the case of debit cards). The real impact of these breaches has been on the companies that have been compromised. Target reported $61 million in total breach expenses during the quarter of the breach, and this number is sure to grow as time goes on.

There is another type of financial fraud that is hitting companies as well: wire transfer fraud. This type of fraud costs approximately $1 billion per year but generally doesn’t get the media coverage we have seen with recent personal information breaches, perhaps because it doesn’t involve millions of individuals’ payment card numbers or because breach notifications usually aren’t required if a consumer’s personal information isn’t lost.

The ploy is fairly simple: an attacker gains access to a commercial bank account, wires as much money as possible to another bank account, and withdraws the stolen money before the unauthorized transfer is noticed. Often the recipient bank accounts and withdrawals are handled by unwitting “mules” who answer the “Work From Home!” ads that seem to be plastered all over the Internet and on telephone poles across the country. The mules believe they are working for a legitimate company handling office finances when in reality they are withdrawing the stolen money and forwarding it to the overseas (usually somewhere in Eastern Europe) masterminds behind the scheme.

Unlike personal consumer bank accounts, which fall under FDIC regulations and have the same federal liability limits as debit cards ($50 if the bank is notified within 2 days and $500 if the bank is notified within 60 days), there is essentially unlimited liability for commercial bank accounts. It is entirely possible for an entire bank account to be cleaned out in a matter of hours. In 2009 Experi-Metal Inc., a Michigan-based company, had $5.2 million wired out of its account at Comerica in a single day. The bank was able to recover most of the money because the transactions had been detected by fraud-alerting algorithms, but Experi-Metal was still left short by $561,000.
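The difference between capped consumer liability and uncapped commercial liability is easy to see as arithmetic. The short Python sketch below encodes only the two tiers quoted above; the function names are hypothetical, and because the text gives no figure for notifications made after 60 days, the sketch treats that case as "no cap stated" rather than guessing one.

```python
from typing import Optional


def consumer_liability_cap(days_until_notified: int) -> Optional[int]:
    """Return the consumer's liability cap in dollars, per the tiers above.

    Returns None when the bank is notified after 60 days, because the
    text does not state a cap for that case.
    """
    if days_until_notified < 0:
        raise ValueError("days_until_notified cannot be negative")
    if days_until_notified <= 2:
        return 50
    if days_until_notified <= 60:
        return 500
    return None


def consumer_exposure(stolen: int, days_until_notified: int) -> int:
    """Worst-case loss for a consumer account under the tiers above."""
    cap = consumer_liability_cap(days_until_notified)
    return stolen if cap is None else min(stolen, cap)


def commercial_exposure(stolen: int) -> int:
    """Commercial accounts have no such cap: the whole amount is at risk."""
    return stolen


# The same hypothetical $100,000 theft, reported the next day:
print(consumer_exposure(100_000, 1))   # capped by the first tier
print(commercial_exposure(100_000))    # nothing limits the business's loss
```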

Experi-Metal’s story is fairly typical: most victims are left with losses in excess of $100,000. This seems like a pittance compared to the Target losses, but it could be a devastating blow for a small or midsized business with a much smaller revenue stream than the $21.5 billion Target reported during the same quarter as the recent breach. These attacks are happening regularly, and they aren’t just targeting businesses: Public schools, libraries, universities, and non-profits have all been victimized in this manner.

Most banks accept no liability for the missing money, because the breaches are occurring on the customer’s computer systems, not the bank's. These can range from a simple phishing attack in which an email purporting to be from the bank attempts to trick an unwitting user into directly revealing his or her banking passwords to complex botnets made up of malware-infected computers around the world waiting to capture these credentials.

Law enforcement does try to break up these fraud networks when they can, but it can take years. With many of the perpetrators targeting US businesses but operating out of foreign countries, it can be difficult for US law enforcement to find the masterminds behind the operation and get the quick cooperation they would need to effect any meaningful arrests. Businesses certainly shouldn’t hold out any hope that these modern-day bank robbers will be caught and their money returned.

Some businesses have tried to fight back against the banks in court with mixed success. Patco Construction Co. of Maine lost $588,000 in 2009 and, after repeatedly losing in lower courts, was able to win a judgment from the 1st Circuit Court of Appeals in July 2012 forcing the bank to cover its losses. On the other hand, Choice Escrow and Land Title LLC of Missouri also lost $440,000 in 2009, and on June 11, 2014, the 8th Circuit Court of Appeals ruled that not only was the bank not responsible for the losses, but that the bank can pursue Choice Escrow to pay for its legal defense costs. Given the potential losses from a breach and the expensive, uncertain, and lengthy nature of attempting to recover funds from a bank, it is clear that businesses need to focus on protecting themselves from fraudulent transfers.

Malware and botnets are an enormous threat on the Internet today, and many of them are designed to steal financial details in order to facilitate wire transfer fraud. The ZeuS botnet alone (the same piece of malware that caused the Patco breach described above) is estimated to have stolen $70 million over its lifetime. NTT Com Security’s Global Threat Intelligence Report shows that botnets were responsible for the largest proportion of attacks happening on the Internet in 2013 with 34% of the total. Disturbingly, the same report also shows that 54% to 71% of malware is not detected by antivirus software, which highlights an underlying security issue: Installing antivirus and tossing a firewall on the network is not enough to prevent these types of attacks.

Real network security requires building the capability to monitor a network and respond to attacks. We saw this with the Target breach where, despite spending $1.6 million on FireEye network monitoring software, Target managed to ignore the alerts it generated based on the malware attacking its network. We saw this again with the Neiman Marcus breach where 60,000 alerts were ignored over a three-and-a-half month period. If large companies with multimillion-dollar security budgets can’t protect themselves from malware, then the prospects would seem exceedingly bleak for the small and midsized companies that are being victimized by wire transfer fraud.

In spite of all this, there are low-cost and remarkably simple steps we can take to help significantly reduce the chances of a malware attack compromising a bank account. It can be as simple as isolating the computers used to access bank accounts. Most malware attacks rely on the fact that a single workstation is often used for multiple purposes: If a user is browsing the web he opens his workstation to drive-by download attacks; reading email opens the workstation to malware contained within email attachments; and file-sharing (whether it is a USB memory stick, a corporate shared network drive, or a peer-to-peer network) opens workstations to direct cross-contamination from other infected systems it interacts with.

On the other hand, if a few designated workstations, and these workstations alone, are used solely for the purpose of processing bank transfers to the exclusion of web browsing, email, and all of the other activities that could bring malware onto the system, then the risks of infection would be drastically reduced -- even moreso if these workstations could be firewalled off from the rest of the network or given their own dedicated Internet connections. The cost of a cable modem and a small firewall would almost certainly be a tiny fraction of the potential cost of a single fraudulent transfer.

Phishing attacks serve to illustrate this point further: There is no technical solution that can effectively stop a user who has been duped from sending out passwords; we must instead rely on training and awareness to make sure that individuals who hold the digital keys to a company’s bank accounts are aware of the threats they are facing and how they operate. If more people have the passwords to initiate bank transfers, then there are more people who could potentially leak that information. Keeping the key holders to a minimum allows companies to focus their training and awareness efforts on those few key individuals who matter.

We must also not forget the banks themselves. Many offer enhanced security measures for wire transfers that businesses just aren’t using. In the case of Choice Escrow, mentioned above, the bank offered a system where two passwords would be required, one to approve a wire transfer and another to release the transfer. In this case Choice Escrow chose not to use those dual controls. We have no way to know if using dual controls would have made a difference in the breach or the court case, but it is certainly telling that an easy-to-use security feature was not being employed. There are likely many companies that are not leveraging all the security tools the banks are providing for them, simply for the sake of convenience.

The ultimate liability solution may go beyond technology as well. The ability for hackers to launch fraudulent wire transfers seems to be under the radar of most businesses, as is the lack of liability that the banks accept. At least one bank, JPMorgan Chase & Co, does offer insurance on commercial accounts. Perhaps as more businesses become aware of the underlying risks in commercial bank accounts they will move to banks that offer more robust protections and instigate a change in the banking industry. Or perhaps we are just waiting for our “Target” moment when a major publicly traded corporation finds tens of millions of dollars missing from its bank account and makes the front-page news.

Less publicized (but nonetheless costly) incidents of fraud, questions of liability, and mixed success in court complicate the allocation of security resources.

High-profile breaches of consumer data have been in the news lately, with Neiman Marcus, Michaels, and Target each losing hundreds of thousands to millions of payment card details. As of last week it looks as if we will be able to add P.F. Chang’s to that list as well.

Much of the media coverage of these events has revolved around the impact on consumers and what consumers should do to protect themselves, but the reality of these breaches is that the consumers are the least likely to be affected: Federal law limits liability for fraudulent credit or debit card purchases to $50 in most cases (with the condition that the loss or theft of the card is reported promptly in the case of debit cards). The real impact of these breaches has been on the companies that have been compromised. Target reported $61 million in total breach expenses during the quarter of the breach, and this number is sure to grow as time goes on.

There is another type of financial fraud that is hitting companies as well: wire transfer fraud. This type of fraud costs approximately $1 billion per year but generally doesn’t get the media coverage we have seen with recent personal information breaches, perhaps because it doesn’t involve millions of individuals’ payment card numbers or because breach notifications usually aren’t required if a consumer’s personal information isn’t lost.

The ploy is fairly simple: an attacker gains access to a commercial bank account, wires as much money as possible to another bank account, and withdraws the stolen money before the unauthorized transfer is noticed. Often the recipient bank accounts and withdrawals are handled by unwitting “mules” who answer the “Work From Home!” ads that seem to be plastered all over the Internet and on telephone poles across the country. The mules believe they are working for a legitimate company handling office finances when in reality they are withdrawing the stolen money and forwarding it to the overseas (usually somewhere in Eastern Europe) masterminds behind the scheme.

Unlike personal consumer bank accounts, which are covered by federal Regulation E and have the same liability limits as debit cards ($50 if the bank is notified within 2 days and $500 if the bank is notified within 60 days), there is essentially unlimited liability for commercial bank accounts. It is entirely possible for an entire bank account to be cleaned out in a matter of hours. In 2009 Experi-Metal Inc., a Michigan-based company, had $5.2 million wired out of its account at Comerica in a single day. The bank was able to recover most of the money because the transactions had been detected by fraud-alerting algorithms, but Experi-Metal was still left short by $561,000.
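To make the gap between consumer and commercial accounts concrete, here is a minimal sketch of the liability tiers described above. The function names are ours, and the treatment of a consumer who reports after 60 days is an assumption (the figures above do not cover that case); this is an illustration, not legal guidance.

```python
from typing import Optional


def liability_cap(account_type: str, days_to_notify: int) -> Optional[int]:
    """Return the account holder's liability cap in dollars, or None if uncapped."""
    if account_type == "commercial":
        return None  # essentially unlimited liability for commercial accounts
    if days_to_notify <= 2:
        return 50
    if days_to_notify <= 60:
        return 500
    # Assumption: no cap is modeled once the 60-day window has passed.
    return None


def account_holder_loss(stolen: int, account_type: str, days_to_notify: int) -> int:
    """Return how much of a fraudulent transfer the account holder absorbs."""
    cap = liability_cap(account_type, days_to_notify)
    return stolen if cap is None else min(stolen, cap)
```

Under these assumptions, a consumer who reports a $561,000 loss the next day absorbs $50, while a business in the same position absorbs the full amount unless the bank manages to recover it.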

Experi-Metal’s story is fairly typical: most victims are left with losses in excess of $100,000. This seems like a pittance compared to the Target losses, but it could be a devastating blow for a small or midsized business with a much smaller revenue stream than the $21.5 billion Target reported during the same quarter as the recent breach. These attacks are happening regularly, and they aren’t just targeting businesses: Public schools, libraries, universities, and non-profits have all been victimized in this manner.

Most banks accept no liability for the missing money, because the breaches are occurring on the customer’s computer systems, not the bank's. These can range from a simple phishing attack in which an email purporting to be from the bank attempts to trick an unwitting user into directly revealing his or her banking passwords to complex botnets made up of malware-infected computers around the world waiting to capture these credentials.

Law enforcement does try to break up these fraud networks when they can, but it can take years. With many of the perpetrators targeting US businesses but operating out of foreign countries, it can be difficult for US law enforcement to find the masterminds behind the operation and get the quick cooperation they would need to effect any meaningful arrests. Businesses certainly shouldn’t hold out any hope that these modern-day bank robbers will be caught and their money returned.

Some businesses have tried to fight back against the banks in court, with mixed success. Patco Construction Co. of Maine lost $588,000 in 2009 and, after repeatedly losing in lower courts, won a judgment from the 1st Circuit Court of Appeals in July 2012 forcing the bank to cover its losses. On the other hand, Choice Escrow and Land Title LLC of Missouri lost $440,000 in 2009, and on June 11, 2014, the 8th Circuit Court of Appeals ruled not only that the bank was not responsible for the losses, but also that the bank could pursue Choice Escrow for its legal defense costs. Given the potential losses from a breach and the expensive, uncertain, and lengthy nature of attempting to recover funds from a bank, it is clear that businesses need to focus on protecting themselves from fraudulent transfers.

Malware and botnets are an enormous threat on the Internet today, and many of them are designed to steal financial details in order to facilitate wire transfer fraud. The ZeuS botnet alone (the same piece of malware that caused the Patco breach described above) is estimated to have stolen $70 million over its lifetime. NTT Com Security’s Global Threat Intelligence Report shows that botnets were responsible for the largest proportion of attacks happening on the Internet in 2013 with 34% of the total. Disturbingly, the same report also shows that 54% to 71% of malware is not detected by antivirus software, which highlights an underlying security issue: Installing antivirus and tossing a firewall on the network is not enough to prevent these types of attacks.

Real network security requires building the capability to monitor a network and respond to attacks. We saw this with the Target breach where, despite spending $1.6 million on FireEye network monitoring software, Target ignored the alerts the software generated about the malware attacking its network. We saw this again with the Neiman Marcus breach, where 60,000 alerts were ignored over a three-and-a-half month period. If large companies with multimillion-dollar security budgets can’t protect themselves from malware, then the prospects would seem exceedingly bleak for the small and midsized companies that are being victimized by wire transfer fraud.

In spite of all this, there are low-cost and remarkably simple steps we can take to significantly reduce the chances of a malware attack compromising a bank account. It can be as simple as isolating the computers used to access bank accounts. Most malware attacks rely on the fact that a single workstation is often used for multiple purposes: browsing the web opens the workstation to drive-by download attacks; reading email opens it to malware contained within email attachments; and file sharing (whether through a USB memory stick, a corporate shared network drive, or a peer-to-peer network) opens it to direct cross-contamination from other infected systems it interacts with.

On the other hand, if a few designated workstations, and these workstations alone, are used solely for the purpose of processing bank transfers to the exclusion of web browsing, email, and all of the other activities that could bring malware onto the system, then the risks of infection would be drastically reduced, and even more so if these workstations could be firewalled off from the rest of the network or given their own dedicated Internet connections. The cost of a cable modem and a small firewall would almost certainly be a tiny fraction of the potential cost of a single fraudulent transfer.
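The isolation idea boils down to a default-deny rule: the banking workstation may talk to the bank and nothing else. A minimal sketch of that policy follows. The hostname is hypothetical, and a real deployment would enforce the rule on the firewall itself rather than in a script.

```python
# Hypothetical allowlist: the only destinations the banking workstation may reach.
ALLOWED_DESTINATIONS = {
    ("online.bank.example", 443),  # assumed address of the bank's HTTPS portal
}


def is_allowed(host: str, port: int) -> bool:
    """Default-deny egress check: permit only explicitly listed destinations."""
    return (host.lower(), port) in ALLOWED_DESTINATIONS
```

Everything not on the list, including webmail and general browsing, is refused, which is what keeps the usual malware delivery paths away from the machine.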

Phishing attacks serve to illustrate this point further: There is no technical solution that can effectively stop a user who has been duped from sending out passwords; we must instead rely on training and awareness to make sure that individuals who hold the digital keys to a company’s bank accounts are aware of the threats they are facing and how they operate. If more people have the passwords to initiate bank transfers, then there are more people who could potentially leak that information. Keeping the key holders to a minimum allows companies to focus their training and awareness efforts on those few key individuals who matter.

We must also not forget the banks themselves. Many offer enhanced security measures for wire transfers that businesses just aren’t using. In the case of Choice Escrow, mentioned above, the bank offered a system where two passwords would be required, one to approve a wire transfer and another to release the transfer. In this case Choice Escrow chose not to use those dual controls. We have no way to know if using dual controls would have made a difference in the breach or the court case, but it is certainly telling that an easy-to-use security feature was not being employed. There are likely many companies that are not leveraging all the security tools the banks are providing for them, simply for the sake of convenience.
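Dual controls of the kind described above can be sketched in a few lines. This is an illustration only, not the bank's actual system: the class and field names are ours, and the rule that the approver and the releaser must be different people is an assumption about how such a control is typically meant to be used.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class WireTransfer:
    amount: int
    destination: str
    approved_by: Optional[str] = None
    released_by: Optional[str] = None

    def approve(self, user: str) -> None:
        """First control: a user approves the transfer."""
        self.approved_by = user

    def release(self, user: str) -> None:
        """Second control: a different user releases the approved transfer."""
        if self.approved_by is None:
            raise PermissionError("transfer has not been approved")
        if user == self.approved_by:
            # Assumption: one person may not perform both steps.
            raise PermissionError("approver cannot also release the transfer")
        self.released_by = user

    @property
    def sent(self) -> bool:
        """The money moves only after both steps have succeeded."""
        return self.released_by is not None
```

With a rule like this, an attacker who steals one set of credentials can approve a transfer but cannot release it, so a single infected workstation is no longer enough to move money.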

The ultimate liability solution may go beyond technology as well. The ability of hackers to launch fraudulent wire transfers seems to be under the radar of most businesses, as is the lack of liability that the banks accept. At least one bank, JPMorgan Chase & Co., does offer insurance on commercial accounts. Perhaps as more businesses become aware of the underlying risks in commercial bank accounts they will move to banks that offer more robust protections and instigate a change in the banking industry. Or perhaps we are just waiting for our “Target” moment when a major publicly traded corporation finds tens of millions of dollars missing from its bank account and makes the front-page news.

PCI-DSS 3.0 Helps Merchants Defend Against Emerging Threats

15 06, 14

Protecting sensitive personal data continues to be a priority for merchants and businesses that operate in the payment card industry. With the release of PCI-DSS 3.0 many organizations that are already PCI compliant or are working toward becoming PCI compliant are wondering what these changes will mean to their organization.

Let’s take a look at what has changed and the impact this will have on how organizations approach PCI compliance.

Merchants and businesses should find that PCI-DSS 3.0 is easier and more intuitive to work with than earlier versions. The main impact of the changes includes:

What new requirements are included in PCI-DSS 3.0?